The way we consume music has changed completely in the past few decades. Digital streaming services such as Apple Music and Spotify are on the rise, and with them, audio normalization has become essential.

But what does audio normalization mean? How can you normalize these digital audio files? This guide will talk about audio normalization, so keep reading.

What Is Audio Normalization?

So, what happens when you normalize audio? Normalization applies a constant gain to the file so that it sits at a specified volume level, or target amplitude, while preserving the overall dynamic range of your track.

What does normalizing audio mean in practice? Normalization is often done to bring a selected audio file to the highest volume it can reach without clipping. You can also use it to gain more consistency across all the audio clips within an EP or an album.

Audio clips with larger dynamic ranges are a bit tough to normalize effectively. For example, peak amplitudes might become distorted or squashed during normalization.

Hence, each audio clip must be approached differently when you are looking to normalize it. The entire process of audio normalization is critical for any digital recording. Remember that you can’t just go for the one-size-fits-all technique here.

Why Should You Normalize Your Audio?

Now the question is, why is this normalization so important? The following are some scenarios where loudness normalization becomes critical:

Streaming service preparation

Streaming services tend to set a standard normalization level for all songs hosted in their libraries. Hence, listeners won't have to drastically lower the volume or turn it up when switching between songs.

Each platform has a different audio target level, so engineers sometimes prepare separate masters for different streaming platforms. The loudness targets for different platforms are:

- Amazon → -9 to -13 LUFS

- Apple Music → -16 LUFS

- CD → -9 LUFS

- Deezer → -14 to -16 LUFS

- SoundCloud → -8 to -13 LUFS

- Spotify → -14 LUFS

Every audio engineer has a different philosophy for deciding the target level of each master, but you should treat these standards as a guide.
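To make the idea of a target concrete: the gain needed to reach a platform's level is simply the target minus the track's measured loudness. The sketch below assumes the integrated loudness has already been measured with some other tool (the -9.5 LUFS figure is made up), uses only the single-value targets from the list above, and the function and dictionary names are hypothetical.

```python
# Single-value targets taken from the list above (LUFS).
# Platforms listed with a range are left out to keep the sketch simple.
TARGETS_LUFS = {
    "Apple Music": -16.0,
    "CD": -9.0,
    "Spotify": -14.0,
}


def gain_to_target_db(measured_lufs: float, target_lufs: float) -> float:
    """Gain in dB that moves a track from its measured loudness to the target."""
    return target_lufs - measured_lufs


def db_to_linear(gain_db: float) -> float:
    """Convert a gain in dB to a linear amplitude multiplier."""
    return 10.0 ** (gain_db / 20.0)


if __name__ == "__main__":
    measured = -9.5  # hypothetical integrated loudness of a loud master
    for platform, target in TARGETS_LUFS.items():
        gain_db = gain_to_target_db(measured, target)
        print(f"{platform}: {gain_db:+.1f} dB (x{db_to_linear(gain_db):.3f})")
```

A negative result means the track would be turned down on that platform, and a positive one means it would be turned up.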

Achieve The Max Volume

The process of audio normalization is used to gain maximum volume for each audio clip. It can be handy when importing clips into your audio editing program or making each audio clip louder.

Creating a Consistent Loudness Level Across Different Audio Clips

You can use audio normalization to keep all your audio clips at a relatively similar level. It's especially important in processes such as gain staging, where you set audio levels to prepare for the next processing stage.

You should normalize and edit your audio clips after completing your music projects, such as an EP or an album. Your recording atmosphere, as well as sound, should remain consistent. Therefore, you have to adjust your gain for all your songs.

Two Types of Normalization

There are different types of normalizations that can be used for different audio recordings and their use cases. In most cases, loudness and peak normalizations are used.

Peak Normalization

This normalization is a linear process: the same gain is applied across the whole audio signal so that the peak amplitude of your audio clip reaches a chosen target.

The overall dynamic range will remain the same. Apart from its level, the new audio clip will sound, by and large, the same as before. It will simply be a quieter or louder audio clip.

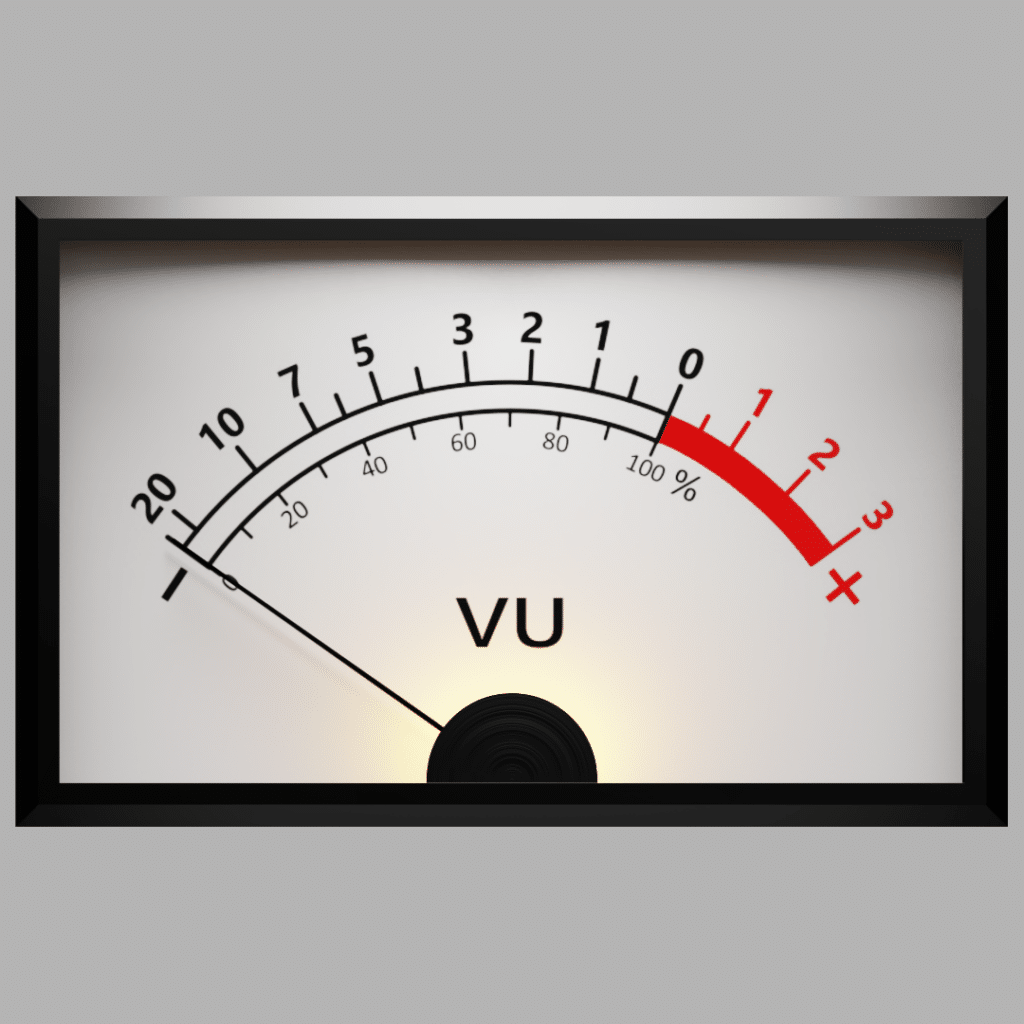

Peak normalization looks for an audio clip's highest PCM (pulse code modulation) sample value and applies gain so that this peak sits at the target, usually the upper limit of digital audio, a maximum peak of 0 dBFS. So, it acts on the peak levels and not on the perceived volume of your audio clip.
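A minimal sketch of peak normalization, assuming the audio is held as floating-point samples where full scale is 1.0 (real files would first need decoding into this form). The function names and the example values are illustrative only.

```python
import math


def peak_normalize(samples, target_dbfs=0.0):
    """Scale float samples (full scale = 1.0) so the largest peak hits target_dbfs."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return list(samples)  # silence: there is nothing to scale
    target_linear = 10.0 ** (target_dbfs / 20.0)
    gain = target_linear / peak  # one gain value for the whole clip
    return [s * gain for s in samples]


def peak_dbfs(samples):
    """Highest sample level in dBFS (assumes the clip is not pure silence)."""
    return 20.0 * math.log10(max(abs(s) for s in samples))


if __name__ == "__main__":
    clip = [0.05, -0.25, 0.1, 0.2, -0.02]
    louder = peak_normalize(clip, target_dbfs=-1.0)
    print(f"before: {peak_dbfs(clip):.2f} dBFS, after: {peak_dbfs(louder):.2f} dBFS")
```

Because every sample is multiplied by the same number, the ratio between loud and quiet samples is unchanged, which is why the dynamic range stays the same.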

Loudness Normalization

It's a more complex normalization type that also considers the human aspect of hearing. Our ears do not respond to amplitude in a simple way, so the perceived volume of a sound can differ from its measured level.

The processing is built around the loudness measurement described in EBU R 128. For example, a sound that is sustained at a given level tends to sound louder than a sound played only briefly at that same level.

So, you can put two sounds out at the same measured level, and your ears will still perceive the sustained one to be louder. Loudness normalization takes these perceptual offsets into account.

Some people believe that louder sounds tend to be better. This belief is what drove the so-called loudness wars, in which musicians did their best to reach the highest possible volume in the hope of producing a more sonically pleasing result.

With audio normalization on streaming services, the loudness war has lost much of its point, and you don't have to listen to different volumes when switching between tracks.

Loudness normalization is measured in LUFS (loudness units relative to full scale), which is truer to human ears. Hence it serves as an audio standard in applications such as radio, TV, and streaming services. And again, as in the case of peak normalization, peaks still have to stay at or below 0 dBFS.

RMS Volume Detection

If you don't use peak normalization, you will usually go for loudness normalization. Besides LUFS, there is another way of measuring loudness in your audio clips, and that is RMS volume detection.

This process is very similar to normalization using LUFS, but it works with RMS levels instead. RMS stands for root mean square and measures the average volume of a section within a clip or of the entire clip itself. However, unlike LUFS, RMS doesn't take human listening into account: it treats all frequencies equally, while the LUFS measurement weights the signal to reflect how we hear.
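As a rough sketch of RMS-based normalization, again assuming floating-point samples with full scale at 1.0: measure the RMS level in dBFS, then apply whatever constant gain brings it to the target. The -18 dBFS default and the function names are assumptions chosen for illustration.

```python
import math


def rms_dbfs(samples):
    """RMS level of float samples (full scale = 1.0), in dBFS."""
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0.0:
        return float("-inf")  # silence has no measurable level
    return 10.0 * math.log10(mean_square)  # same as 20 * log10(rms)


def rms_normalize(samples, target_dbfs=-18.0):
    """Apply one constant gain so the clip's RMS level lands on target_dbfs."""
    level = rms_dbfs(samples)
    if level == float("-inf"):
        return list(samples)
    gain = 10.0 ** ((target_dbfs - level) / 20.0)
    return [s * gain for s in samples]


if __name__ == "__main__":
    # Ten cycles of a full-scale sine wave, 100 samples per cycle.
    sine = [math.sin(2 * math.pi * n / 100) for n in range(1000)]
    print(f"full-scale sine: {rms_dbfs(sine):.2f} dBFS RMS")
    print(f"after normalizing: {rms_dbfs(rms_normalize(sine)):.2f} dBFS RMS")
```

Note that this sketch does nothing to protect the peaks: a clip with a low RMS level but sharp transients could be pushed past full scale by the gain it applies.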

That's why sound engineers work with LUFS normalization as a standard. Mastering volume is not just about creating matching volumes throughout an audio clip. It also means considering dynamics, human perception, and the balance between the two.

Normalization vs. Compression – The Difference

There is a misconception that compression and normalization are the same. Compression brings up the quietest parts of an audio clip and reduces the peaks. As a result, you get a more consistent overall volume level. Normalization instead takes the loudest point in an audio clip as its reference.

From there, the same proportional gain is applied to the rest of the clip. This preserves the clip's dynamics and raises the perceived volume according to the peak level. Dynamics here refer to the difference between a clip's softest and loudest sounds.
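The difference can be shown with a small sketch. The compressor here is a deliberately simplified assumption: it works sample by sample with no attack or release, which real compressors do not, and the threshold and ratio values are arbitrary. It is only meant to show that a constant gain keeps the dynamic range while compression narrows it.

```python
import math


def to_db(x):
    """Level of a single non-zero sample in dBFS."""
    return 20.0 * math.log10(abs(x))


def apply_gain(samples, gain_db):
    """Normalization-style change: one constant gain for every sample."""
    gain = 10.0 ** (gain_db / 20.0)
    return [s * gain for s in samples]


def compress(samples, threshold_db=-12.0, ratio=4.0):
    """Simplified static compressor: levels above the threshold are divided by ratio."""
    out = []
    for s in samples:
        if s == 0.0:
            out.append(0.0)
            continue
        level_db = to_db(s)
        if level_db > threshold_db:
            level_db = threshold_db + (level_db - threshold_db) / ratio
        out.append(math.copysign(10.0 ** (level_db / 20.0), s))
    return out


def dynamic_range_db(samples):
    """Difference between the loudest and softest non-zero samples, in dB."""
    levels = [to_db(s) for s in samples if s != 0.0]
    return max(levels) - min(levels)


if __name__ == "__main__":
    clip = [0.01, -0.1, 0.5, -1.0]
    print(f"original:   {dynamic_range_db(clip):.1f} dB")
    print(f"gain only:  {dynamic_range_db(apply_gain(clip, -6.0)):.1f} dB")
    print(f"compressed: {dynamic_range_db(compress(clip)):.1f} dB")
```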

Audio Normalization Drawbacks

Now, you must consider that there are a few drawbacks of audio normalization as well. In most cases, you only do normalization in the final stages of your creation process.

You shouldn't normalize individual audio clips that are going to be mixed in a multitrack recording. If you have normalized every individual component up to the digital audio ceiling, they will clip when you play them together, because their levels add up.
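A small sketch of why this happens, using two made-up tracks that have each been peak-normalized to full scale (1.0). The track names and sample values are invented for illustration.

```python
def mix(tracks):
    """Sum equal-length tracks sample by sample, as a mix bus would."""
    return [sum(frame) for frame in zip(*tracks)]


def peak(samples):
    """Largest absolute sample value."""
    return max(abs(s) for s in samples)


def hard_clip(samples, ceiling=1.0):
    """What a fixed-point output does to anything above full scale."""
    return [max(-ceiling, min(ceiling, s)) for s in samples]


if __name__ == "__main__":
    kick = [1.0, 0.2, 0.0, -0.3]   # hypothetical track, peak at 1.0
    bass = [0.8, 1.0, 0.1, -0.5]   # hypothetical track, peak at 1.0
    summed = mix([kick, bass])
    over = sum(1 for s in summed if abs(s) > 1.0)
    print(f"peak of the mix: {peak(summed):.2f}, samples over full scale: {over}")
    print(f"after clipping:  {hard_clip(summed)}")
```

Each track is within limits on its own, but the sum is not, so the overshooting samples get flattened at the ceiling.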

Normalization also has some destructive traits. When you normalize, the gain change is written into the audio track itself. Hence, normalization has a clear time and place: you should normalize only after processing your audio files to your taste.

Some other points to consider

In most cases, people use peak normalization on their audio clips to see the waveforms on their screens. It’s a bad idea, and your program should have the option to make those waveforms much bigger without permanently changing your audio file.

Apart from that, you can use virtual normalization within numerous media players when you’re matching volume levels for your finished tracks. The most common one is ReplayGain.

The aim here is to make different tracks of music play back at the same level without altering the file. These programs work by measuring the EBU R 128 loudness or RMS volume of each file and then deciding how much to turn the music in each file up or down.

This might not be the perfect option, but it's not a bad one. Still, it's interesting to hear different songs at the same level.

The Process of Audio Normalization

This section will showcase how to normalize audio using Ableton Live. You can use any other DAW, too, as most of them have normalization capabilities integrated into their controls.

Step 1 – Consolidating your track

To convert your audio clips into a clean track, you have to select your audio track and then right-click and select “Consolidate” from the pop-up menu. You can press Ctrl/Cmd + J to consolidate a track quickly.

Step 2 – Disabling Warp and resetting gain to 0 dB

The next step is to go to the sample clip, disable Warp, and reset gain to 0 dB. You can reset the gain by double-clicking on that arrow.

Step 3 – Checking against the original file

Now, you have normalized your clip and can check the levels by comparing them to the original audio clip. You are doing this to ensure the files have been processed correctly.

When Should You Use Normalization?

By modern standards, normalization can seem like a pretty old-fashioned technique. There are several other, less invasive methods to increase the gain of your track. So why would you use normalization?

Some uses of normalization are pretty old and date back to the earlier days of digital audio. Many components had limited signal-to-noise ratio and dynamic range performance during that time. Normalizing audio could help in getting the best results from those primitive DA/AD converters.

Normalization is still a pretty common feature on hardware samplers, where it helps in equalizing the volume of various samples.

So, the signal-to-noise ratio and dynamic range will remain the same. You can use your sampler's normalization function for building presets and patches.

When Not to Normalize?

Remember that normalization is not needed in most music production work that has nothing to do with sampling. It is a convenient way to bring a track up to a good volume. However, there are various reasons why other methods are better.

Normalization might be good, but it can be destructive. When you create a new file, you will have to commit to the changes that you have made. There is no way to go back, so if you normalize and delete the original file, you will be stuck with the normalized version you can’t edit.

As we have mentioned, there are other ways to raise the level of your audio clips, since normalization is just a constant gain change and works like any other level adjustment. So, before reaching for normalization, you should try another method that works for you.

The process of normalization can also create inter-sample peaks. More and more producers are looking to make their tracks louder, but normalization is one of the worst options you can go for when you need to raise the level of an entire track.
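Inter-sample peaks are easiest to see with a contrived test signal. The sketch below assumes a sine wave at one quarter of the sample rate, offset by 45 degrees so that no sample lands on a crest. Instead of a real oversampling true-peak meter, it evaluates the known sine formula on a finer time grid as a stand-in for what the reconstructed waveform does between samples.

```python
import math

PHASE = math.pi / 4  # 45 degree offset so no sample lands on a crest


def sampled_sine(num_samples, gain=1.0):
    """Sine at a quarter of the sample rate (one cycle every 4 samples)."""
    return [gain * math.sin(2 * math.pi * n / 4 + PHASE) for n in range(num_samples)]


def sample_peak(samples):
    """Largest absolute value among the stored samples."""
    return max(abs(s) for s in samples)


def reconstructed_peak(num_samples, gain=1.0, steps_per_sample=64):
    """Peak of the same sine evaluated between the samples on a finer time grid."""
    fine = (
        gain * math.sin(2 * math.pi * (k / steps_per_sample) / 4 + PHASE)
        for k in range(num_samples * steps_per_sample)
    )
    return max(abs(v) for v in fine)


if __name__ == "__main__":
    clip = sampled_sine(16)
    gain = 1.0 / sample_peak(clip)  # peak-normalize the samples to 0 dBFS
    normalized = sampled_sine(16, gain)
    overshoot_db = 20 * math.log10(reconstructed_peak(16, gain))
    print(f"sample peak after normalizing: {sample_peak(normalized):.3f}")
    print(f"peak between the samples: {overshoot_db:+.2f} dBFS")
```

Here the samples themselves never exceed full scale, yet the waveform they describe rises about 3 dB above it, which is why leaving some headroom below 0 dBFS is a common precaution.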

It's better to go for gain staging. It involves checking the volume of each element that you record and making sure that none of them exceeds a certain level throughout the mix.

The rule of thumb here is to keep the peaks in your audio clips around -9 to -10 dBFS. The body of your waveform should be around -18 dBFS.
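The rule of thumb can be turned into a quick check. This sketch makes a few assumptions: the peak figure is read as negative dBFS (digital peaks cannot sit above 0 dBFS), the "body" of the waveform is approximated by its RMS level, and the clip is not silent. The function names and the -9 dBFS ceiling default are illustrative choices, not a standard.

```python
import math


def level_report(samples):
    """Peak and RMS level of float samples (full scale = 1.0), in dBFS."""
    peak = max(abs(s) for s in samples)
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return {"peak_dbfs": 20 * math.log10(peak), "rms_dbfs": 20 * math.log10(rms)}


def suggested_trim_db(samples, peak_ceiling_dbfs=-9.0, body_target_dbfs=-18.0):
    """Trim that puts the RMS on target, backed off if peaks would pass the ceiling."""
    report = level_report(samples)
    trim = body_target_dbfs - report["rms_dbfs"]
    peak_after = report["peak_dbfs"] + trim
    if peak_after > peak_ceiling_dbfs:
        trim -= peak_after - peak_ceiling_dbfs  # peaks win over the body target
    return trim


if __name__ == "__main__":
    # Ten cycles of a full-scale sine wave as a stand-in for a hot recording.
    hot = [math.sin(2 * math.pi * n / 100) for n in range(1000)]
    report = level_report(hot)
    print(f"peak {report['peak_dbfs']:.1f} dBFS, body {report['rms_dbfs']:.1f} dBFS")
    print(f"suggested trim: {suggested_trim_db(hot):+.1f} dB")
```

The trim is meant to be applied with a gain or utility control at the start of the channel, so the source file itself stays untouched.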

“…your program should have the option to make those waveforms much bigger without permanently changing your audio file.

Source: https://primesound.org/normalize-audio…”

Ableton Live does not have this option. What are Ableton Live users' options to make the waveforms of quieter recordings more visible? Thank you for a well-written article.